Evidence, findings and raison d’être.

Steve Huntoon is the principal attorney in the Washington, D.C. law practice Energy Counsel, LLP, having over 30 years’ experience in energy regulatory law, dealing with issues such as regional RTO capacity markets, wind projects, LNG regulation, industry restructuring, and retail choice, and representing many leading energy companies, including Dynegy, Exelon, and NextEra. He has testified before federal and state regulatory agencies, published numerous industry papers, and is a former President of the Energy Bar Association.

Many years into mandatory reliability standards for the Bulk Power System (BPS), it is time to ask some basic questions: Has this statutory scheme materially improved reliability? And if so with what value, and at what cost?

The answers to these questions are not what one might expect. Instead, the available evidence suggests a troubling set of findings:

- Impact. Mandatory reliability standards have had little measurable impact on reliability.

- Value. Load loss reduction and its value have been small.

- Effectiveness. Relatively few outages can be avoided/reduced by reliability standards.

- Cost. Mandatory reliability standards are not "free." There are costs of infrastructure, and potential adverse consequences.

- Implications. We should focus more on what causes outages and work backwards, applying true cost-benefit analysis.

NERC's Claims

In considering whether mandatory reliability standards have materially improved reliability we might begin with what the North American Electric Reliability Corporation (NERC) has to say. After all, NERC develops and enforces the standards, albeit under the oversight of the U.S. Federal Energy Regulatory Commission (FERC).

NERC produces reams of data about reliability, but its clearest empirical claim of improved reliability appears to be a reduction in non-weather-related significant outages due to transmission-related events. NERC says:1

... the number of BPS transmission-related events resulting in loss of firm load, other than events caused by factors external to the transmission system's actual performance (i.e., weather-initiated events), decreased from an average of ten per year over a ten-year period (2002 - 2011) to seven in 2013.

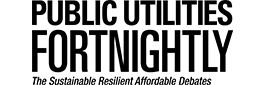

Figure 1 - Significant Outage Events 2002-2013

The data NERC is relying on is reprinted in Figure 1. NERC is comparing an average of ten significant outages (load-loss "events") per year over the ten years from 2002 through 2011 with only seven such events in 2013. In other words, the number of significant outage events in 2013, the most recent year for which data is available, was just a bit less than the ten-year baseline average: three fewer (ten minus seven).

Let us assume that NERC is correct - that mandatory reliability standards have reduced annual occurrence of significant outage events by this difference of three. What is that worth?

As it happens, this value can be estimated. To do so, we can assume that the average firm load loss per outage is 1,200 MW, and that the average outage duration is 2.9 hours.2

So at three avoided outages per year, the annual reduction in outage MWh is 1,200 MW of average firm load loss x 2.9 hours of average outage duration x 3 avoided outages = 10,440 MWh.

Figure 2 - Causes of Transmission Outage Events 2009-2013

And what is the value of a 10,440 MWh reduction in firm load loss? Applying a FERC-accepted Value of Lost Load (VOLL) of $3,500/MWh,3 the annual value of the avoided load loss is $36.54 million (10,440 MWh x $3,500/MWh).
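The arithmetic above is simple enough to check in a few lines. The following is a minimal Python sketch that assumes only the article's own inputs (1,200 MW average firm load loss, 2.9-hour average duration, and the $3,500/MWh FERC-accepted VOLL); the function name `avoided_outage_value` is illustrative, not drawn from any NERC or FERC tool. The same function also gives the ceiling for eliminating all seven 2013 outages.

```python
# Assumptions taken from the article (NERC State of Reliability 2014; FERC-accepted VOLL)
AVG_LOAD_LOSS_MW = 1_200   # average firm load lost per significant outage, MW
AVG_DURATION_H = 2.9       # average outage duration, hours
VOLL_PER_MWH = 3_500       # Value of Lost Load, $/MWh


def avoided_outage_value(outages_avoided: float,
                         load_mw: float = AVG_LOAD_LOSS_MW,
                         duration_h: float = AVG_DURATION_H,
                         voll: float = VOLL_PER_MWH) -> tuple[float, float]:
    """Return (avoided MWh, dollar value of that avoided load loss)."""
    avoided_mwh = outages_avoided * load_mw * duration_h
    return avoided_mwh, avoided_mwh * voll


# NERC's implied improvement (ten-year average of 10 minus 2013 count of 7),
# and the ceiling case of eliminating all seven 2013 outages
for label, n in [("Three avoided outages", 10 - 7), ("All seven 2013 outages", 7)]:
    mwh, value = avoided_outage_value(n)
    print(f"{label}: {mwh:,.0f} MWh, ${value / 1e6:.2f} million")
```

Because the value is simply the product of the inputs, scaling any one assumption (for example, a higher VOLL) scales the result proportionally.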

In the grand scheme of things that is pretty small stuff. By way of comparison, the annual budget for statutory functions for NERC and its Regional Entities is $184,777,000.4 Compliance costs incurred each year by the Registered Entities (those subject to the standards) presumably would total a multiple of this amount, though solid data is lacking.

Comparing $36 million of benefit against hundreds of millions of dollars in costs raises the question of the value proposition.5
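One way to size the gap is to ask how high VOLL would have to be for the three-avoided-outage benefit to match just the NERC and Regional Entity budget. The sketch below uses only figures quoted in this article. It deliberately leaves out Registered Entity compliance costs, for which solid data is lacking, so it understates total costs and the break-even VOLL it reports is a lower bound.

```python
# Figures quoted in the article
AVOIDED_MWH = 10_440            # three avoided outages x 1,200 MW x 2.9 h
VOLL_PER_MWH = 3_500            # FERC-accepted VOLL, $/MWh
NERC_RE_BUDGET = 184_777_000    # 2015 statutory budget, NERC + Regional Entities, $

annual_benefit = AVOIDED_MWH * VOLL_PER_MWH
benefit_to_budget = annual_benefit / NERC_RE_BUDGET

# VOLL at which the avoided load loss would merely equal the NERC/RE budget.
# Registered Entity compliance costs are excluded (no solid data), so the
# true break-even VOLL would be higher still.
breakeven_voll = NERC_RE_BUDGET / AVOIDED_MWH

print(f"Annual benefit: ${annual_benefit / 1e6:.2f} million")
print(f"Benefit as share of NERC/RE budget: {benefit_to_budget:.0%}")
print(f"Break-even VOLL (budget only): ${breakeven_voll:,.0f}/MWh "
      f"({breakeven_voll / VOLL_PER_MWH:.1f}x the FERC-accepted value)")
```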

We might also ask what it would mean, in a perfect world, to somehow eliminate all seven of the significant outages that occurred in 2013. Eliminating all non-weather firm load losses in 2013 would have been worth only about $85 million (7 outages x 1,200 MW x 2.9 hours x $3,500/MWh VOLL). More small stuff.

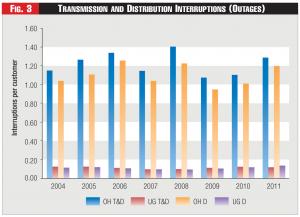

Figure 3 - Transmission and Distribution Interruptions (Outages)

And is even this small stuff attainable? Alas, no. The bulk of transmission circuit outages are beyond the influence of standards. The sobering data can be seen in Figure 2.6

NERC data show that the vast majority of causes of transmission outage events (e.g., lightning, other weather, human error, equipment failure, foreign interference) are completely or mostly beyond the influence of reliability standards.

So, hypothetically, there is little more that could be done. Realistically, even less.

Meanwhile, not only are the bulk of transmission circuit outages beyond the influence of reliability standards, but the vast bulk of all service interruptions arise on the distribution system - not the bulk transmission grid.

Data from the Edison Electric Institute (EEI) show that transmission system interruptions (outages) are swamped by distribution system outages. This is shown in Figure 3. For a given year, the difference between the System Average Interruption Frequency Index (SAIFI) for overhead distribution (OH D) and overhead transmission and distribution (OH T&D) gives the overhead transmission SAIFI.7 This difference turns out to be very small.
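The SAIFI subtraction described above can be expressed directly. This is a minimal sketch: the `saifi` helper follows the standard IEEE Std 1366 definition (total customer interruptions divided by total customers served), while `transmission_saifi` and `transmission_share` are hypothetical helper names. The input values in the usage lines are placeholders chosen only to show the mechanics; they are not EEI data and would need to be replaced with the OH T&D and OH D values for a given year from Figure 3.

```python
def saifi(customer_interruptions: float, customers_served: float) -> float:
    """SAIFI per IEEE Std 1366: total customer interruptions / total customers served."""
    return customer_interruptions / customers_served


def transmission_saifi(saifi_oh_td: float, saifi_oh_d: float) -> float:
    """Overhead transmission SAIFI, taken as OH T&D SAIFI minus OH distribution SAIFI."""
    return saifi_oh_td - saifi_oh_d


def transmission_share(saifi_oh_td: float, saifi_oh_d: float) -> float:
    """Fraction of overhead T&D interruption frequency attributable to transmission."""
    return transmission_saifi(saifi_oh_td, saifi_oh_d) / saifi_oh_td


# HYPOTHETICAL placeholder values for illustration only; these are NOT EEI data.
# Substitute the OH T&D and OH D SAIFI values for a given year from Figure 3.
oh_td, oh_d = 1.20, 1.10
print(f"Transmission SAIFI: {transmission_saifi(oh_td, oh_d):.2f}")
print(f"Transmission share of OH T&D SAIFI: {transmission_share(oh_td, oh_d):.1%}")
```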

Thus, the vast bulk of customer outages arises on the distribution system, not the transmission system.

Adverse Unintended Consequences

So what is the import of the discussion so far? The value of avoided transmission outages is small and can't get much bigger. The bulk of transmission outages lie beyond the influence of reliability standards. And transmission-related outages themselves are almost insignificant relative to distribution-related outages.

The rejoinder might run as follows: Even if all this is so, what harm could come from reliability standards (other than their cost)? The answer may come as a surprise: mandatory reliability standards carry possible adverse consequences of their own.

With mandatory standards, the Registered Entities find that their discretion to focus resources on one reliability risk versus another becomes greatly reduced. Any violation of any reliability standard is subject to reporting and to significant penalty. In effect, everything must be treated as a priority. And as the old saying goes, when everything is a priority, nothing is a priority.

This potential adverse consequence may be most serious when it comes to existential threats to the grid, particularly cybersecurity. It is difficult to judge whether the huge institutional lift associated with all other requirements has distracted the industry from the really big cyber threats.

It is also possible that the existence of mandatory reliability standards for the transmission system - and lack of such standards for distribution - may bias resource allocation towards transmission-level risks relative to distribution-level risks. This potential adverse consequence is highlighted by the fact, as shown above in Figure 3, that the vast bulk of outages are distribution-related. We have no way of knowing whether a given level of resources is better spent on transmission-system reliability or distribution-system reliability, and whether that choice is affected by the existence of transmission-level standards.8

The point is this: there may be adverse unintended consequences of mandatory reliability standards beyond the cost of compliance by those charged with, and those subject to, enforcement.

Why the Cost-Benefit Imbalance?

How have we come to this place, where benefits appear relatively small, costs high, and little more able to be accomplished as a practical matter - with adverse unintended consequences to boot?

The fundamental problem appears to be that standards development has not included any cost-benefit analysis.9 There is no projection of avoided load loss from a potential standard - or the corresponding value of that avoided load loss - against the costs and risks of a standard.10
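To make the missing step concrete, here is a hedged sketch of the kind of screen the article has in mind: project the firm load loss a proposed standard would avoid, value it at VOLL, and net out the standard's projected cost. `ProposedStandard`, `net_annual_benefit`, and every input value are hypothetical; no NERC process or actual standard is represented. As endnote 13 cautions, a frequency-based screen like this one is not suited to low-probability, high-impact risks such as cybersecurity.

```python
from dataclasses import dataclass


@dataclass
class ProposedStandard:
    """Hypothetical inputs for screening a proposed reliability standard."""
    name: str
    outages_avoided_per_year: float  # projected reduction in firm-load-loss events
    avg_load_loss_mw: float          # average firm load lost per event, MW
    avg_duration_h: float            # average event duration, hours
    annual_cost: float               # projected compliance + administration cost, $/yr


def net_annual_benefit(std: ProposedStandard, voll_per_mwh: float) -> float:
    """Value of projected avoided load loss minus projected annual cost, $/yr."""
    avoided_mwh = std.outages_avoided_per_year * std.avg_load_loss_mw * std.avg_duration_h
    return avoided_mwh * voll_per_mwh - std.annual_cost


# Illustrative inputs only; no actual standard or cost estimate is implied.
candidate = ProposedStandard("Hypothetical standard X", outages_avoided_per_year=0.5,
                             avg_load_loss_mw=1_200, avg_duration_h=2.9,
                             annual_cost=10_000_000)
net = net_annual_benefit(candidate, voll_per_mwh=3_500)
verdict = "passes" if net > 0 else "fails"
print(f"{candidate.name}: net benefit ${net / 1e6:,.2f} million/yr ({verdict} the screen)")
```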

NERC has developed what it calls a "Cost Effective Analysis Process." The name sounds encouraging. But that process itself is flawed. The need for a standard is assumed; the only question is whether the standard as drafted is reasonable given the assumed need.11

A similar observation about lack of cost-benefit analysis can be made when it comes to the compliance/enforcement regime. There has been no validation of the pervasive audit approach versus, for example, a more limited regime of self-certifications and random audits.

Not Rocket Science

Is it too difficult to make dollars and sense of reliability matters? Not at all. We need look no further than a recent decision of the D.C. Public Service Commission regarding undergrounding of select distribution feeder circuits. First, the decision adopts the ratio of Customer Interruption Minutes per dollar of undergrounding investment (CIM/$) as a criterion for determining which distribution feeders to underground. This is a measure of reliability benefit relative to cost. Second, it is possible to tease out several key numbers from the decision: (1) a reliability Value of Service (aka VOLL) of $42 million annually, (2) an investment of $220 million in the subject phase, and (3) a return on investment (ROI) of 7.65%. Taken together, these numbers suggest that the investment easily passes a sanity check: $42 million of annual value is well above the roughly $16.8 million annual return (7.65% of $220 million).12
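Both elements of the D.C. PSC approach can be sketched in a few lines of Python. This is a rough illustration under stated assumptions: the sanity check compares the annual reliability value with ROI times investment, as the article does, and ignores depreciation, taxes, and O&M that a full revenue requirement would include. The CIM/$ ranking treats CIM as a given per-feeder number, since the order's exact computation is not reproduced here, and the feeder data are hypothetical.

```python
def passes_sanity_check(annual_value: float, investment: float, roi: float) -> bool:
    """Rough screen: does annual reliability value exceed the annual return on investment?"""
    return annual_value > investment * roi


def rank_by_cim_per_dollar(feeders: list[tuple[str, float, float]]) -> list[tuple[str, float, float]]:
    """Sort (name, customer interruption minutes, cost in $) by CIM/$, highest first."""
    return sorted(feeders, key=lambda f: f[1] / f[2], reverse=True)


# Figures from the D.C. PSC decision as summarized in the article
annual_value, investment, roi = 42e6, 220e6, 0.0765
annual_return = investment * roi
print(f"Annual return: ${annual_return / 1e6:.2f} million; "
      f"value/return ratio {annual_value / annual_return:.2f}; "
      f"passes: {passes_sanity_check(annual_value, investment, roi)}")

# HYPOTHETICAL feeders for illustration only (not taken from the PSC order)
feeders = [("Feeder A", 900_000, 12e6), ("Feeder B", 1_500_000, 30e6), ("Feeder C", 400_000, 4e6)]
for name, cim, cost in rank_by_cim_per_dollar(feeders):
    print(f"{name}: {cim / cost:.3f} CIM/$")
```

Ranking by the ratio rather than by raw CIM favors the feeders that deliver the most reliability per dollar, which is the point of a benefit-relative-to-cost criterion.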

Why shouldn't this sort of information - even a rough cut - be a touchstone for all things reliability?

In short, our experience with mandatory reliability standards suggests that the value of load loss reduction has been small. That is not because the standards themselves are somehow wrong, but because the major causes of transmission-related outages have been and remain beyond the influence of standards, and the major causes of outages generally remain lodged at the distribution level.

And against the relatively small upside of these standards we need also to recognize that they do entail substantial costs and may cause adverse unintended consequences.

How did we get here? We've gotten to this point because the standards development process has never included cost-benefit analysis. Yet it is possible to perform that kind of analysis13 - at least as a sanity check - and it is not too late to start.

Endnotes:

1. North American Electric Reliability Corp., "Five-Year Electric Reliability Organization Performance Assessment Report," Docket No. RR14-5-000, pages 2-3 (July 21, 2014) (the NERC measure is similar to the FERC measure in its most recent Strategic Plan, March 2014, page 22).

2. NERC's State of Reliability 2014 report, Figures 4.2 and 4.3.

3. Midwest Independent Transmission System Operator, Inc., 123 FERC ¶ 61,297, at P 106 (2008). VOLL estimates vary widely by source, customer class, duration of outage, etc. (e.g., Ernest Orlando Lawrence Berkeley National Laboratory, "Estimated Value of Service Reliability for Electric Utility Customers in the United States," June 2009), but the discussion here is valid across a wide range of VOLLs.

4. North American Electric Reliability Corp., "Request for Acceptance of 2015 Business Plans and Budgets of NERC and Regional Entities and for Approval of Proposed Assessments to Fund Budgets," Docket No. RR14-6-000, Att. 1 (Aug. 22, 2014).

5. The governing statute provides little guidance on what the value proposition should be, but the overall statutory direction is broad. Under Section 215(d)(2) of the Federal Power Act the Commission may approve a proposed reliability standard "... if it determines that the standard is just, reasonable, not unduly discriminatory or preferential, and in the public interest." This broad language gives the Commission plenty of room to consider the benefits and costs of a proposed standard.

6. Transmission Availability Data System (TADS) Outage Events by Initiating Cause Code (ICC), from NERC's State of Reliability 2014 report, Table 3.1.

7. Underground (UG) transmission and distribution SAIFI data are not relevant to this discussion because the number of such circuits is relatively insignificant.

8. This is not to suggest that there is no incentive to maintain distribution-system reliability. There are potential regulatory sanctions for distribution-level outages, particularly large outages, at the state level. And utility management and personnel have personal stakes in maintaining reliability as they live where they work. But, critically, we do not have a way of knowing whether the confluence of all these influences yields efficient outcomes.

9. The contrast with the accepted approach to resource adequacy is striking. The ultimate objective of both resource adequacy (reliability) and transmission reliability is avoided firm load loss (outages). In the case of the former there is an objective target based on projected firm load loss, i.e., the venerable 1 event in 10 years standard. And, for the most part across the country, resources are procured/planned on a least-cost basis to meet that standard. In contrast, there is no objective target for transmission reliability and, necessarily, no least-cost procurement/planning for such a target.

10. The Commission has said that it will approve removal of existing requirements that: "... (1) provide little protection for Bulk-Power System reliability or (2) are redundant with other aspects of the Reliability Standards." Electric Reliability Organization Proposal to Retire Requirements in Reliability Standards, Order No. 788, 145 FERC ¶ 61,147 at P 18 (2013). Thus far requirements proposed for removal by NERC have been limited to those involving administrative tasks of little value and/or redundancy with other requirements. Electric Reliability Organization Proposal to Retire Requirements in Reliability Standards, Notice of Proposed Rulemaking, 143 FERC ¶ 61,251 (2013). There is no cost-benefit analysis for retaining/removing existing requirements.

11. E.g., Cost Effective Analysis Project 2007-11 Report, April 9, 2014, page 7: Standard approved despite industry view of "...little incremental reliability benefit from the standard...." There is perhaps a glimmer of hope in NERC's recent filing at FERC to remove three "functional registration categories" (Interchange Authorities, Load-Serving Entities, and Purchasing-Selling Entities) because removal would pose "... little to no risk to the reliability of the Bulk-Power System...." North American Electric Reliability Corp., "Petition of the North American Electric Reliability Corporation for Approval of Risk-Based Registration Initiative Rules of Procedure Revisions," Docket No. RR15-4-000, page 4 (Dec. 11, 2014). There are 1,205 registrations that would thus be removed. NERC is to be commended for this initiative, but it does raise the question of why such categories ever existed and for so long.

12. In the Matter of the Application for Approval of Triennial Underground Infrastructure Improvement Projects Plan, Order No. 17697, Public Service Commission of the District of Columbia, PP 152, 168 and 169 (November 12, 2014).

13. Exception should be noted for low probability-high impact risks. By their nature, such risks cannot be adequately assessed by their frequency in causing past outages. These risks, such as cybersecurity, pose existential threats to the grid and do not lend themselves to cost-benefit analysis.

Lead image © Diro | Dreamstime.com